Background

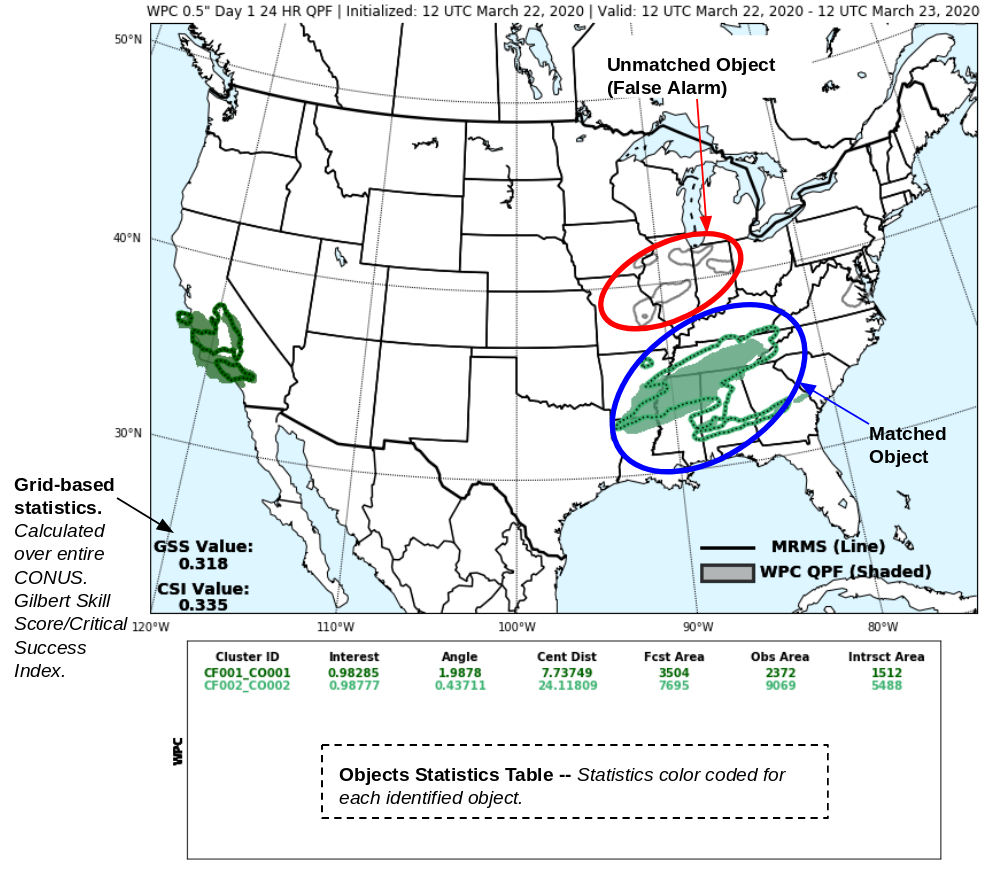

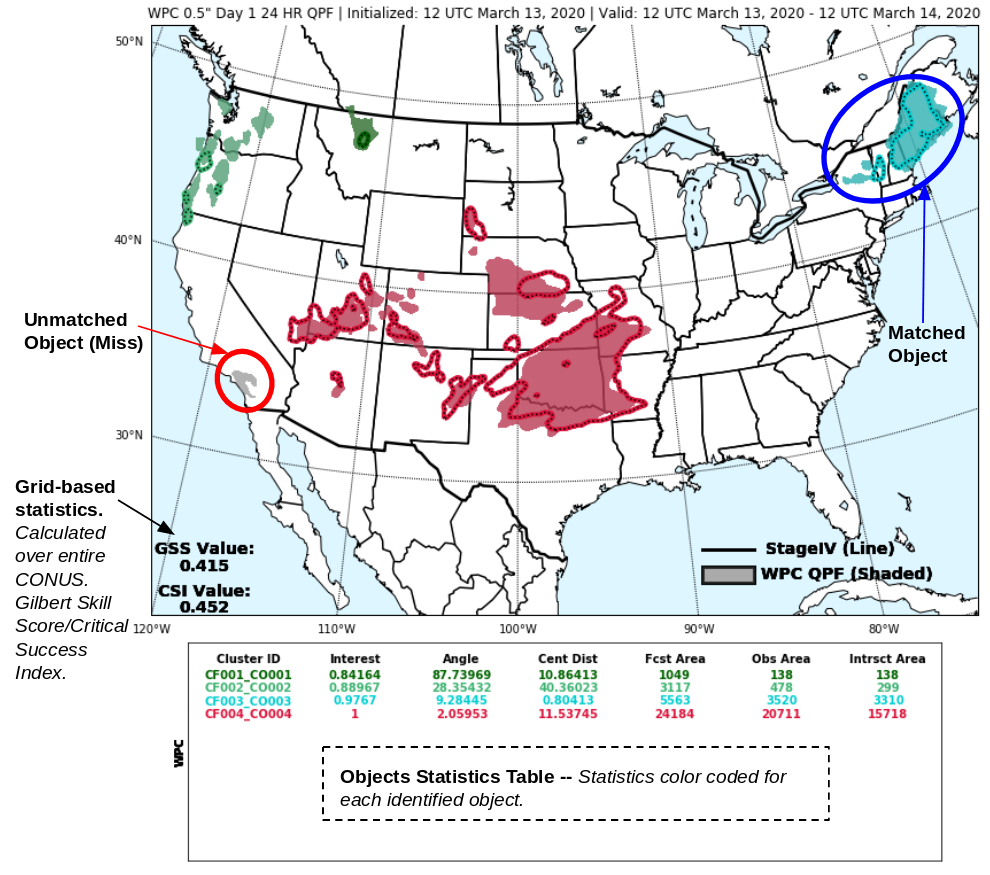

The Method for Object-Based Diagnostic Evaluation (MODE) is an object-oriented verification technique that is part of the Model Evaluation Tools (MET) verification package developed by the Developmental Testbed Center (DTC). Object-oriented verification methods were developed to better account for spatial discontinuities between forecast and observed precipitation and to provide more specific information about forecast quality than can be obtained from traditional verification measures (threat score, bias, etc.) alone. These techniques are considered particularly useful for evaluating high-resolution model guidance. Traditional verification methods often struggle to assess the performance of high-resolution models accurately, since even a small spatial error can cause the forecast to be penalized twice (once for missing the observed precipitation and a second time for giving a false alarm). The goal of the MODE tool is to evaluate forecast quality in a manner similar to a forecaster completing a subjective forecast evaluation.
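
The double penalty described above can be illustrated with a minimal sketch. It assumes a toy one-dimensional grid and hypothetical helper names (`contingency`, `threat_score`); it is not part of MET or MODE. The forecast has the right size and intensity but is displaced by three grid points, so a point-by-point comparison counts every observed point as a miss and every forecast point as a false alarm.

```python
# Toy 1-D "grids": 1 = precipitation at or above the threshold, 0 = below it.
# The values are invented for illustration.
observed = [0, 0, 1, 1, 1, 0, 0, 0, 0, 0]
forecast = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]  # same feature, displaced 3 points


def contingency(fcst, obs):
    """Count hits, misses, and false alarms point by point."""
    hits = sum(1 for f, o in zip(fcst, obs) if f and o)
    misses = sum(1 for f, o in zip(fcst, obs) if o and not f)
    false_alarms = sum(1 for f, o in zip(fcst, obs) if f and not o)
    return hits, misses, false_alarms


def threat_score(hits, misses, false_alarms):
    """Threat score: hits / (hits + misses + false alarms)."""
    total = hits + misses + false_alarms
    return hits / total if total else float("nan")


hits, misses, false_alarms = contingency(forecast, observed)
score = threat_score(hits, misses, false_alarms)
print(hits, misses, false_alarms, score)  # prints: 0 3 3 0.0
```

The threat score is zero even though a forecaster would likely judge the forecast as close. This is the situation object-based methods are meant to handle.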

How it Works

MODE identifies precipitation objects in both the forecast and observed fields at a number of different thresholds. It then uses a variety of object characteristics (e.g., distance between objects, size of objects, angle of orientation) to determine the degree of similarity between objects in the forecast and observed fields. Objects that are found to be similar to one another are considered “matched”, while those that are not similar are considered “unmatched”. An unmatched object in the forecast field is equivalent to a false alarm. An unmatched object in the observed field is equivalent to a miss. Examples of the graphical verification output produced by MODE are below. Matched objects are shown in the same color in both the forecast and the observed fields. In the cases below, the colored objects are matched with one another. Unmatched objects are always displayed in gray. Gray contours, as in the example on the left, represent unmatched forecast objects (false alarms), and gray shaded areas, as in California in the example on the right, represent unmatched observed objects (misses).
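
The identify-then-match idea can be sketched as follows. This is a deliberately simplified illustration, not MODE's actual algorithm. It assumes objects are 4-connected regions at or above a threshold and that two objects match when their centroids lie within a hypothetical `max_distance`. MODE itself combines several object characteristics into an interest value. The function names and grid values are invented for the example.

```python
from math import hypot


def find_objects(grid, threshold):
    """Return objects as sets of (row, col) points at or above the threshold,
    grouped by 4-neighbor connectivity."""
    rows, cols = len(grid), len(grid[0])
    seen, objects = set(), []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] < threshold or (r, c) in seen:
                continue
            stack, points = [(r, c)], set()  # flood fill from this seed point
            seen.add((r, c))
            while stack:
                y, x = stack.pop()
                points.add((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen
                            and grid[ny][nx] >= threshold):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            objects.append(points)
    return objects


def centroid(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def match_objects(fcst_objs, obs_objs, max_distance):
    """Pair objects whose centroids are within max_distance grid points.
    Returns (matched pairs, unmatched forecast indices, unmatched observed indices)."""
    pairs = []
    for i, f in enumerate(fcst_objs):
        for j, o in enumerate(obs_objs):
            (fy, fx), (oy, ox) = centroid(f), centroid(o)
            if hypot(fy - oy, fx - ox) <= max_distance:
                pairs.append((i, j))
    matched_f = {i for i, _ in pairs}
    matched_o = {j for _, j in pairs}
    unmatched_f = [i for i in range(len(fcst_objs)) if i not in matched_f]
    unmatched_o = [j for j in range(len(obs_objs)) if j not in matched_o]
    return pairs, unmatched_f, unmatched_o


# Invented 24 hr precipitation amounts in inches.
forecast = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 2.5, 2.2, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 2.1, 0.0, 0.0, 0.0, 0.0, 2.4, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.3, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
]
observed = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 2.6, 2.2, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 2.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [2.8, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
]

fcst_objs = find_objects(forecast, threshold=2.0)
obs_objs = find_objects(observed, threshold=2.0)
pairs, false_alarms, misses = match_objects(fcst_objs, obs_objs, max_distance=2.0)
print(pairs, false_alarms, misses)  # prints: [(0, 0)] [1] [1]
```

Here the first forecast object pairs with the first observed object, the second forecast object is left unmatched (a false alarm), and the second observed object is left unmatched (a miss).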

In addition to the graphical output, MODE provides statistical information for each matched object. This information includes a parameter called the interest value, which is an overall measure of similarity between objects in the forecast and observed fields. The interest value ranges from 0 to 1, with a value of at least 0.70 required for objects to be considered matched. MODE also provides the following: centroid distance: the distance between the center of a forecast object and the center of the corresponding observed object (smaller is better); angle: for non-circular objects, a measure of orientation error (smaller is better); forecast area: the number of grid points enclosed in a forecast object; observation area: the number of grid points enclosed in the observed object; intersect area: the number of grid points in both the forecast and the observed objects. These statistics can be found in the table below the image and are color coded to match each identified object.
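
As a rough illustration of these statistics, the sketch below computes centroid distance and the three area measures for one forecast object and one observed object, each represented as a set of grid points. The `toy_interest` function is purely hypothetical: its weights, its `max_distance`, and its scoring functions are assumptions made for the example and are not MODE's configuration. Only the 0.70 matching threshold comes from the text above. The angle attribute is omitted for brevity.

```python
from math import hypot

MATCH_THRESHOLD = 0.70  # interest value required for a match, per the text


def centroid(points):
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


def object_attributes(fcst_obj, obs_obj):
    """Pairwise attributes for one forecast and one observed object,
    each given as a set of (row, col) grid points."""
    (fy, fx), (oy, ox) = centroid(fcst_obj), centroid(obs_obj)
    return {
        "centroid_distance": hypot(fy - oy, fx - ox),  # grid points (assumed unit)
        "forecast_area": len(fcst_obj),
        "observation_area": len(obs_obj),
        "intersect_area": len(fcst_obj & obs_obj),
    }


def toy_interest(attrs, max_distance=10.0, w_distance=0.5, w_overlap=0.5):
    """Illustrative only: a weighted average of two scores in [0, 1].
    The weights, max_distance, and scoring functions are hypothetical."""
    distance_score = max(0.0, 1.0 - attrs["centroid_distance"] / max_distance)
    smaller_area = min(attrs["forecast_area"], attrs["observation_area"])
    overlap_score = attrs["intersect_area"] / smaller_area
    weighted = w_distance * distance_score + w_overlap * overlap_score
    return weighted / (w_distance + w_overlap)


forecast_object = {(1, 1), (1, 2), (2, 1), (2, 2)}
observed_object = {(1, 1), (1, 2), (2, 2), (2, 3)}

attrs = object_attributes(forecast_object, observed_object)
interest = toy_interest(attrs)
is_matched = interest >= MATCH_THRESHOLD
print(attrs, round(interest, 2), is_matched)
```

For these two objects the centroid distance is 0.5 grid points, both areas are 4, and the intersect area is 3, so the toy interest comes out well above the 0.70 threshold.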

MODE at WPC

MODE verification is available for Day 1, Day 2, and Day 3 forecasts of 24 hr precipitation valid at 1200 UTC. The preliminary verification uses MRMS radar-derived precipitation observations and is available the same day. The final verification uses Stage IV precipitation observations and is available two days later. In addition to the WPC forecast, verification is also available for model forecasts from the GFS, ECMWF, NBMv3.2, NAM 12km, CMC, UKMET, NAM CONUS Nest, HRRR, HREF Blended Mean, GEM Regional, the NCEP high resolution window runs (ARW, ARW Member2, and NMMB), and a WPC-generated bias-corrected multi-model ensemble (MMEBC; see presentations MMEBC QPF Part I and MMEBC QPF Part II).

All forecasts and observations are re-gridded to a 5 km grid prior to verification. To compare WPC forecasts with the model data available at the time they were generated, the forecast lead times are offset so that the 24 hr (Day 1), 48 hr (Day 2), and 72 hr (Day 3) WPC forecasts are compared to the 36 hr, 60 hr, and 84 hr model forecasts, respectively. Selected MODE settings can be found here: MODE Settings
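
The lead-time pairing above amounts to a fixed 12 hr offset, which can be written out as a small sketch. The constant and function names are hypothetical, and the 12 hr value is inferred from the pairs listed in the text rather than stated there.

```python
# Offset inferred from the pairs in the text: 24->36, 48->60, 72->84.
MODEL_LEAD_OFFSET_HR = 12

WPC_LEAD_HOURS = {"Day 1": 24, "Day 2": 48, "Day 3": 72}


def comparable_model_lead(wpc_lead_hr, offset_hr=MODEL_LEAD_OFFSET_HR):
    """Return the model forecast hour compared with a given WPC lead time."""
    return wpc_lead_hr + offset_hr


lead_pairs = {
    day: (lead, comparable_model_lead(lead))
    for day, lead in WPC_LEAD_HOURS.items()
}
print(lead_pairs)
# prints: {'Day 1': (24, 36), 'Day 2': (48, 60), 'Day 3': (72, 84)}
```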

Additional References

Davis, C., B. Brown, and R. Bullock, 2006: Object-based verification of precipitation forecasts. Part I: Methodology and application to mesoscale rain areas. Mon. Wea. Rev., 134, 1772-1784.

Davis, C., B. Brown, and R. Bullock, 2006: Object-based verification of precipitation forecasts. Part II: Application to convective rain systems. Mon. Wea. Rev., 134, 1785-1795.

Model Evaluation Tools (MET) was developed at the National Center for Atmospheric Research (NCAR) through grants from the United States Air Force Weather Agency (AFWA) and the National Oceanic and Atmospheric Administration (NOAA). NCAR is sponsored by the United States National Science Foundation.